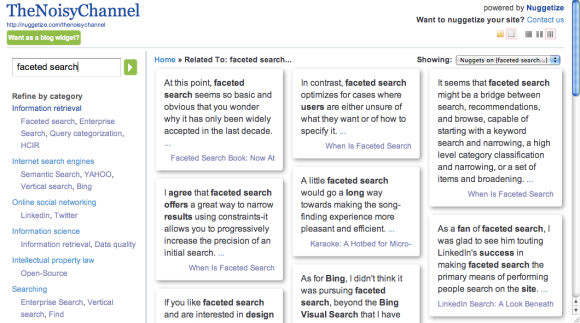

I’ve been exchanging emails with Dhiti co-founder Bharath Mohan about Nuggetize, an intriguing interface that surfaces “nuggets” from a site to reduce the user’s cost of exploring a document collection. Specifically, Nuggetize targets research scenarios where users are likely to assemble a substantial reading list before diving into it. You can try Nuggetize on the general web or on a particular site that has been “nuggetized”, e.g., a blog like this one or Chris Dixon’s.

I’m always happy to see people building systems that explicitly support exploratory search (and am looking forward to seeing the HCIR Challenge entries in a week!). Regular readers may recall my coverage of Cuil, Kosmix, and Duck Duck Go. And of course I helped build a few of my own at Endeca. So what’s special about Nuggetize?

Mohan describes it as a faceted search interface for the web. I’ll quibble here–the interface offers grouped refinement options, but the groups don’t really strike me as facets. Moreover, the interface isn’t really designed to explore intersections of the refinement options–rather, at any given time, you see the intersection of the initial search and a currently selected refinement. But it is certainly an interface that supports query refinement and exploration.
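To make that quibble concrete, here is a minimal sketch of the difference between applying one refinement at a time and intersecting several, as a faceted interface would. The toy documents, the field names, and both functions are hypothetical and only illustrate the distinction; this is not how Nuggetize is implemented.

```python
# Toy collection: each document carries a few hypothetical category labels.
DOCS = {
    "d1": {"topic": "search", "format": "blog"},
    "d2": {"topic": "search", "format": "paper"},
    "d3": {"topic": "music", "format": "blog"},
}


def single_refinement(hits, field, value):
    """One refinement at a time: the initial hits AND the one selected value."""
    return {d for d in hits if DOCS[d].get(field) == value}


def faceted(hits, selections):
    """Faceted navigation: the initial hits AND every selected facet value."""
    return {
        d for d in hits
        if all(DOCS[d].get(field) == value for field, value in selections.items())
    }


initial_hits = {"d1", "d2", "d3"}
print(sorted(single_refinement(initial_hits, "topic", "search")))
print(sorted(faceted(initial_hits, {"topic": "search", "format": "blog"})))
```

In the first call, picking a different refinement replaces the current one; in the second, each additional selection narrows the result set further.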

The more interesting features are the nuggets and the support for relevance feedback.

The nuggets are full sentences, and thus feel quite different from conventional search-engine snippets. Conventional snippets serve primarily to provide information scent, helping users quickly determine the utility of a search result without the cost of clicking through to it and reading it. In contrast, the nuggets are document fragments that are sufficiently self-contained to communicate a coherent thought. The experience suggests passage retrieval rather than document retrieval.

The relevance feedback is explicit: users can thumbs-up or thumbs-down results. After supplying feedback, users can refresh their results (which re-ranks them) and are also presented with suggested categories to use for feedback (both positive and negative). Unfortunately, the research on relevance feedback tells us that, helpful as it could be to improving user experience, users don’t bite. But perhaps users in research scenarios will give it a chance–especially with the added expressiveness and transparency of combining document and category feedback.
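For readers who haven’t seen explicit relevance feedback in action, here is a minimal sketch of the textbook Rocchio approach: move the query vector toward thumbs-up documents and away from thumbs-down ones, then re-rank. I don’t know what Nuggetize does under the hood, so treat the bag-of-words vectors, the weights (`alpha`, `beta`, `gamma`), and the toy documents as assumptions made purely for illustration.

```python
from collections import Counter
import math


def vectorize(text):
    """Bag-of-words term counts (a deliberately crude document model)."""
    return Counter(text.lower().split())


def rocchio(query, liked, disliked, alpha=1.0, beta=0.75, gamma=0.15):
    """Shift the query toward liked documents and away from disliked ones."""
    updated = Counter()
    for term, w in query.items():
        updated[term] += alpha * w
    for doc in liked:
        for term, w in doc.items():
            updated[term] += beta * w / len(liked)
    for doc in disliked:
        for term, w in doc.items():
            updated[term] -= gamma * w / len(disliked)
    # Drop terms whose weight is no longer positive.
    return {t: w for t, w in updated.items() if w > 0}


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values())
    )
    return dot / norm if norm else 0.0


def rerank(query, docs):
    """Return document names ordered by similarity to the query, best first."""
    return sorted(docs, key=lambda name: cosine(query, docs[name]), reverse=True)


docs = {
    "car": vectorize("jaguar car engine speed"),
    "cat": vectorize("jaguar cat jungle prey"),
    "review": vectorize("car engine review"),
}
query = vectorize("jaguar")
# Thumbs-up on the car page, thumbs-down on the cat page.
refined = rocchio(query, liked=[docs["car"]], disliked=[docs["cat"]])
print(rerank(refined, docs))
```

After the feedback, the refined query carries terms like “car” and “engine”, so the car review outranks the cat page even though it never mentions “jaguar”.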

Overall it is a slick interface, and it’s nice seeing the various ideas Mohan and his colleagues put together. There’s certainly room for improvement–particularly in the quality of the categories, which sometimes feel like victims of polysemy. Open-domain information extraction is hard! Some would even call it a grand challenge.

Mohan reads this blog (he reached out to me a few months ago via a comment), and I’m sure he’d be happy to answer questions here.

23 replies on “Exploring Nuggetize”

Thanks Daniel, for a very fair review. We are very eager to read feedback on our system.

A few suggested uses:

1) Try “Get nuggets for a feed”, and drop a url, like Paul Graham’s latest on Yahoo! [http://www.paulgraham.com/yahoo.html]

2) Try “Get nuggets for a topic”, and drop a topic, like [Faceted Navigation]. A query like [HCIR] or [Jaguar] is a good candidate to test out our relevance feedback.

3) Try “Get nuggets for a feed”, and drop an RSS feed you follow often, [http://feeds.feedburner.com/venturebeat]

The categories get better with more documents. The nuggets are decent starting from 1 document in your collection.

Just in case you plan to “Publish” the nuggets you create, you can use a token “betaone” when prompted.

Bharath


Oops… small mistake in my comment.

The first use case should be:

1) Try “Get nuggets for a page”, and drop a url, like Paul Graham’s latest on Yahoo! [http://www.paulgraham.com/yahoo.html]

Nuggetize can also be used to get the most relevant nuggets for a page.


Bharath, I see those suggestions on your site as well; they’d be much easier as samples to click on. Something like this.


Unfortunately, the research on relevance feedback tells us that, helpful as it could be to improving user experience, users don’t bite.

Yeah, wasn’t all that research done for web users, at a time when the unwashed masses were just beginning to come online and were more interested in navigational search than anything else? In other words, the research was done on the wrong target audience, was it not?

It’s a bit of a stretch to go from AOL-like users, to general researchers.


Jeremy: hence the next sentence in that paragraph! But is there any research showing that some class of users takes advantage of relevance feedback when it’s available? I’d think that researchers would be an ideal test case.


Relevance feedback has diminishing returns if the information is consumed just once. However, if the user is creating a reusable body of knowledge – for a related audience – relevance feedback pays back.

For example, if I have to create a body of knowledge on Service Oriented Architecture, I can use relevance feedback to prioritize the best articles, the best categories, weed out the noise, and then publish them to my community. Here’s an example of that: http://nuggetize.com/service-oriented-architecture

Our relevance feedback is meant for use by an expert in the area, who does this one time training, and maintains it – but a larger community benefits.


That’s a good point–I hadn’t thought of the social / collaborative aspect. Indeed, when I was looking at Blekko’s user-created slashtags, I was hoping for something more like this. Of course, there’s a trade-off between expressivity and transparency. But in this case I’m willing to give up a little bit of the latter for a lot of the former.


Jeremy: hence the next sentence in that paragraph!

Ok, ok, true. I guess I was questioning more the veracity of the previous four sentences 🙂

But is there any research showing that some class of users takes advantage of relevance feedback when it’s available? I’d think that researchers would be an ideal test case.

Relevance feedback is used all the time in information retrieval systems. Just not in web search engines. Take Pandora for example. Thumbs up/down is relevance feedback. And I don’t know of any published work on how often it gets used, but it is available, and I would bet horses to hedgehogs that it does get used a significant amount of the time.


Great point re: Pandora — and of course Netflix. Though both have the advantage that the feedback has long-term value far beyond a single session. And I suppose I’d distinguish taste feedback from relevance feedback, though I realize that’s a slippery slope (e.g., a Pandora station is a sort of continuous query).

Still, I think you know what I mean. I didn’t see much emphasis on relevance feedback when I was in the enterprise search world, and it’s fared even worse in web search. That’s despite the lab evidence that it would work if users tried it. So I’d like to understand the conditions under which users opt to use relevance feedback mechanisms–beyond the obvious one of their being available.


So I’d like to understand the conditions under which users opt to use relevance feedback mechanisms–beyond the obvious one of their being available.

I think the answer is “exploratory search”.

Exploratory search actually covers two types of approaches: Data-exploration, and process-exploration. Data-exploratory, to me, means something like faceted search. Process-exploratory, on the other hand, is not (necessarily) a function of the system as much as it is a function of the user. For process-exploratory, let’s go back to Marchionini’s 2006 CACM article:

‘Most people think of lookup searches as “fact retrieval” or “question answering.” In general, lookup tasks are suited to analytical search strategies that begin with carefully specified queries and yield precise results with minimal need for result set examination and item comparison. Clearly, lookup tasks have been among the most successful applications of computers and remain an active area of research and development. However, as the Web has become the information resource of first choice for information seekers, people expect it to serve other kinds of information needs and search engines must strive to provide services beyond lookup.

Searching to learn is increasingly viable as more primary materials go online. Learning searches involve multiple iterations and return sets of objects that require cognitive processing and interpretation. These objects may be instantiated in various media (graphs, or maps, texts, videos) and often require the information seeker to spend time scanning/viewing, comparing, and making qualitative judgments. Note that “learning” here is used in its general sense of developing new knowledge and thus includes self-directed life-long learning and professional learning as well as the usual directed learning in schools. Using terminology from Bloom’s taxonomy of educational objectives, searches that support learning aim to achieve: knowledge acquisition, comprehension of concepts or skills, interpretation of ideas, and comparisons or aggregations of data and concepts.’

So it is like you say.. when there is long term value beyond a single session, relevance feedback works. But exploratory search is exactly that.. at least process-exploratory search. Learning-oriented search, of the kind that Marchionini describes.

Did enterprise search users have a need to learn? Or were they also just doing lookup search, albeit in an environment without hyperlinks?


I think we agree on where relevance feedback is useful. What isn’t clear is where it is used, outside of recommender systems like Pandora and Netflix.

For example, does anyone use explicit relevance feedback in real e-discovery systems (and not just TREC experiments)? Seems like a natural fit, since part of the workflow is to mark documents as relevant and irrelevant.


[…] Another notable article is the one from the blog The Noisy Channel titled “Exploring Nuggetize”. […]


YouTube has a thumbs up/down button, i.e. explicit relevance feedback. LexisNexis also provides this feature, I believe, though only with one document at a time rather than multiple. Surf Canyon does, too: http://en.wikipedia.org/wiki/Surf_Canyon

And Facebook has an explicit “like” button, which, though I don’t know for sure, I would wager pretty good odds gets used to dynamically affect the stream of information in your life feed. I.e. explicit relevance feedback.

That’s just from a 30 second search. I’m sure I could turn up more.

But even if I couldn’t.. I see this as one of the grand challenges of HCIR. We know on the IR side that relevance feedback is highly effective. And yet we’re not quite sure of the best way to get the user involved. I don’t buy the long-standing excuse that it’s just laziness. I think some of it is the wrong type of need (e.g. navigational) in some circumstances. But as we discussed above, there are plenty of other known circumstances in which it is desirable. So what we really need now is the HCI side of HCIR, to be integrated with the known-effectiveness IR relevance feedback bit.


dynamically affect the stream of information in your life feed

I mean, in your live feed.


I believe that YouTube and Facebook’s feedback mechanisms, like Amazon’s and eBay’s, primarily serve as a signal to others–I’d call these ratings/reviews rather than relevance feedback.

Surf Canyon is interesting, but the closest thing I see to explicit relevance feedback is a “bull’s eye” link that seems to act like the “Similar” link on Google. Indeed, they seem more interested in implicit relevance feedback.

But I agree with you that this is a grand challenge of HCIR–and I think we’re essentially making the same argument. I also don’t think it’s user laziness. Rather, I think users are rightfully skeptical and risk-averse–especially of black-box approaches that can yield seemingly random results.

Even done right, I’m skeptical as to whether relevance feedback belongs on a general web search engine where it only helps a small fraction of information needs. There are other HCIR approaches I’d be inclined to try first. But there’s a much stronger case for it in other contexts, such as research and eDiscovery.


Really? You see Facebook “like” items primarily as signaling others, rather than as providing input to my own stream/filter?

If true, wow.. I really don’t like that.


The Like button lets a user share your content with friends on Facebook. When the user clicks the Like button on your site, a story appears in the user’s friends’ News Feed with a link back to your website.

http://developers.facebook.com/docs/reference/plugins/like


Also from that same page that you just linked to:

This means when a user clicks a Like button on your page, a connection is made between your page and the user. Your page will appear in the “Likes and Interests” section of the user’s profile, and you have the ability to publish updates to the user. Your page will show up in same places that Facebook pages show up around the site (e.g. search), and you can target ads to people who like your content.

In other words, when I, the user, “like” something, that allows a process on the other end (whether manual or automatic) to start filling my feed with content and ads that are more targeted to me. So it’s not just that “like” lets me signal something to my friends. It’s that the things I “like” can start altering the information that I see.

From the perspective of the user, that looks an awful lot like relevance feedback.

See also this:

The article claims that a comment (implicit relevance feedback) receives more weight than a “like” (explicit relevance feedback), but I have to note two things: (1) the “like” does appear to be part of the overall algorithm for determining a user’s own News Feed, and (2) Facebook might not be telling the whole story/truth about how important “like” is. That doesn’t necessarily mean that it’s important. But we do know that it’s in the mixture, i.e. you the user can influence your News Feed by using the like button.

See also:

http://teachtofishdigital.com/facebook-news-feed-optimization/

According to the Facebook consultants at BrandGlue, 1 in 500 updates make it to the news feed. How does it work? If you like or comment on updates from one particular friend quite often, you are likely to see that person’s update in your news feed on a regular basis. To use a personal example, I tend to like or comment on updates from my friend Juan because he is hilarious, my sister who is expecting her first baby, and Arizona Highways’ page because they are always providing great content. Because of my tendency to do so, my personal news feed usually includes an update from one if not all of those profiles.

Read further on down, and it talks about the score being a combination of three factors: affinity, weight, and recency. The weight factor appears to be what you’re talking about.. the signals (likes) from other people. But the affinity factor is the part you control.. your connection to that person, based on comments and, yes, likes. The more you like something from someone, the more of that person’s information you will see, all else equal.

That’s pretty much relevance feedback, non? It’s relevance feedback on people, rather than on documents. But this is a social network, after all. It’s still explicit relevance feedback.
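A rough sketch of how those three factors might combine, and of how a user’s own likes would feed into it. The multiplicative form, the half-life decay, the per-like boost, and every number here are assumptions made for illustration; none of this is Facebook’s actual formula.

```python
def affinity_after_likes(base_affinity, likes, boost_per_like=0.1):
    """Hypothetical: each like you give a friend nudges your affinity upward."""
    return base_affinity + boost_per_like * likes


def update_score(affinity, weight, age_hours, half_life_hours=24.0):
    """Hypothetical score: affinity x weight x a recency term that halves
    every `half_life_hours`."""
    recency = 0.5 ** (age_hours / half_life_hours)
    return affinity * weight * recency


# Same update (same weight, same age), seen by two users who differ only in
# how often they have liked this friend's posts.
rarely_likes = update_score(affinity_after_likes(1.0, likes=0), weight=2.0, age_hours=12)
often_likes = update_score(affinity_after_likes(1.0, likes=10), weight=2.0, age_hours=12)
print(rarely_likes < often_likes)
```

Under these assumptions the weight term is driven by other people’s reactions, while the affinity term is the part your own likes control.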


Points taken. Next time I’ll do my homework! But I am curious how users see it. Relevance feedback isn’t usually a public / social act. Though perhaps all of our online interactions are becoming more public and social.


Naw, given that the Facebook like has both a public and a private relevance feedback component within the very same action, I think you’re probably still correct, in that the main understanding that users have of the “like” button is not traditional relevance feedback, even though that is indeed part of what it gets used for. I wonder, though, if they also understand that it has a propagation component to it, too. Do users have a mental model of either application?


Maybe Max Wilson can tell us. Check out his HCIR 2010 paper on “Casual-leisure Searching: The Exploratory Search Scenarios that Break our Current Models”.


[…] August, I wrote “Exploring Nuggetize“, in which I described an interface that Dhiti co-founder Bharath Mohan developed […]


[…] back linking to his earlier write up about information nuggets. You may want to take a look at “Exploring Nuggetize”. The illustration shows how the “nugget” method converts Noisy Channel articles into what […]
